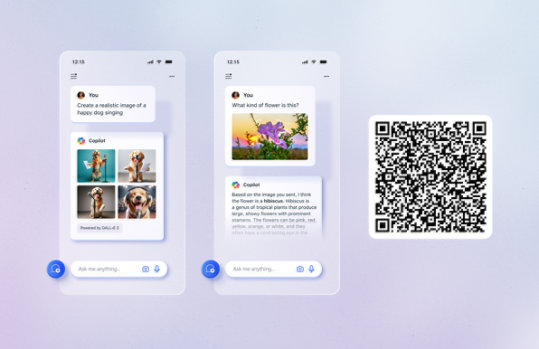


BizTalk Server 2006 R2 Retired Technical documentation

The content you requested has already been retired. It is available to download on this page.


Version: 2006

Date Published: 1/17/2018

File Name: BizTalk_Server_2006_R2.pdf

File Size: 251.7 MB

No application is an island. Whether we like it or not, tying systems together has become the norm. Yet connecting software is about more than just exchanging bytes. As organizations move toward a service-oriented world, the real goal comes within reach: creating effective business processes that unite separate systems into a coherent whole. Microsoft BizTalk Server 2006 supports this goal. Like its predecessors, this release makes it possible to connect diverse software and then graphically create and modify process logic that uses that software. BizTalk Server also enables information workers to monitor running processes, interact with trading partners, and perform other business-oriented tasks.

Supported Operating Systems

Windows 10, Windows 10 Tech Preview, Windows 2000, Windows 2000 Advanced Server, Windows 2000 Professional Edition, Windows 2000 Server, Windows 2000 Service Pack 2, Windows 2000 Service Pack 3, Windows 2000 Service Pack 4, Windows 3.1, Windows 3.11, Windows 7, Windows 7 Enterprise, Windows 7 Enterprise N, Windows 7 Home Basic, Windows 7 Home Basic 64-bit, Windows 7 Home Premium, Windows 7 Home Premium 64-bit, Windows 7 Home Premium E 64-bit, Windows 7 Home Premium N, Windows 7 Home Premium N 64-bit, Windows 7 Professional, Windows 7 Professional 64-bit, Windows 7 Professional E 64-bit, Windows 7 Professional K 64-bit, Windows 7 Professional KN 64-bit, Windows 7 Professional N, Windows 7 Professional N 64-bit, Windows 7 Service Pack 1, Windows 7 Starter, Windows 7 Starter 64-bit, Windows 7 Starter N, Windows 7 Ultimate, Windows 7 Ultimate 64-bit, Windows 7 Ultimate E 64-bit, Windows 7 Ultimate K 64-bit, Windows 7 Ultimate KN 64-bit, Windows 7 Ultimate N, Windows 7 Ultimate N 64-bit, Windows 8, Windows 8 Consumer Preview, Windows 8 Enterprise, Windows 8 Pro, Windows 8 Release Preview, Windows 8.1, Windows 8.1 Preview

A PDF viewer is required to open the documentation.

- The download is a PDF file. To start the download, click Download.

- If the File Download dialog box appears, do one of the following:

  - To start the download immediately, click Open.

  - To copy the download to your computer to view at a later time, click Save.