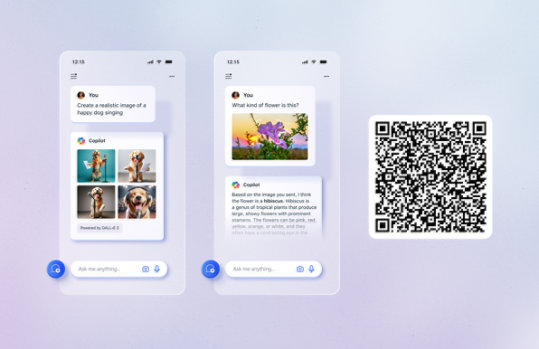

Unlock your potential with Microsoft Copilot

Get things done faster and unleash your creativity with the power of AI anywhere you go.

SQL Server 2000 Retired Technical documentation

The content you requested has been retired. It is available to download on this page.

Version: 1.0

Date Published: 5/31/2016

File Name: SQL2000_release.pdf

File Size: 92.0 MB

Microsoft® SQL Server™ is a relational database management and analysis system for e-commerce, line-of-business, and data warehousing solutions. SQL Server 2000 includes support for XML and HTTP, performance and availability features to partition load and ensure uptime, and advanced management and tuning functionality to automate routine tasks and lower total cost of ownership.

Supported Operating Systems

Windows 2000, Windows 98, Windows ME, Windows NT, Windows XP

Windows Server 2003, Standard Edition; Windows Server 2003, Enterprise Edition; Windows Server 2003, Datacenter Edition; Windows 2000 Server; Windows 2000 Advanced Server; Windows 2000 Datacenter Server; Microsoft Windows NT Server version 4.0 with Service Pack 5 (SP5) or later; Windows NT Server version 4.0, Enterprise Edition, with SP5 or later

- The download is a PDF file. To start the download, click Download.

- If the File Download dialog box appears, do one of the following:

  - To start the download immediately, click Open.

  - To copy the download to your computer to view at a later time, click Save.